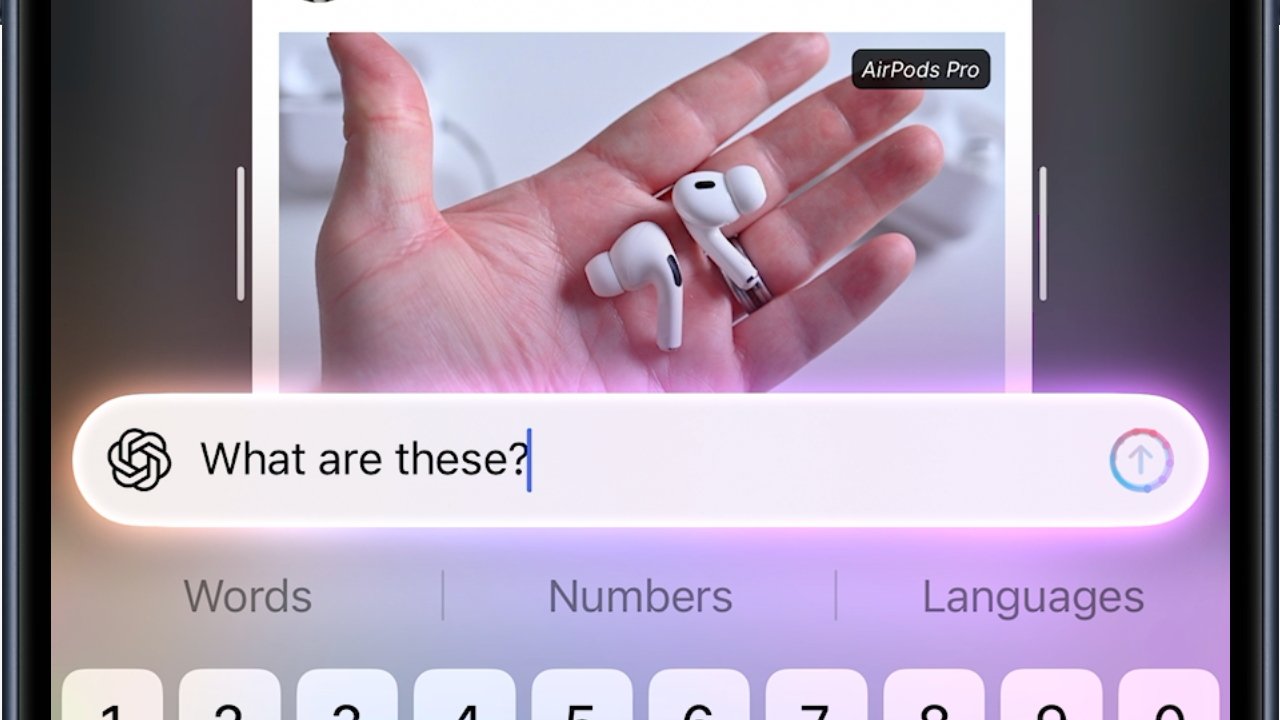

Looking up AirPods from an image on AppleInsider, using Visual Intelligence

Apple has made the smallest update to Visual Intelligence in iOS 26, and yet the impact of being able to use it on any image is huge, and at least doubles the usefulness of this one feature.

Previously on Apple Intelligence... the iPhone’s Visual Intelligence was about the most impressive feature. Just by pointing your iPhone’s camera at something and pressing the Camera Control button, you could get AI to tell you all about it.

This was an extension to Apple’s previous ability with the Photos app to identify, say, plants. Visual Intelligence goes further in that it can also identify famous landmarks, or often just random buildings you’ve always wondered about.

Where Photos could, say, give you the plant’s Latin name, Visual Intelligence could deduce the ingredients in a complicated coffee order.

Then, too, Visual Intelligence can in theory recognize dates on an event poster and put the details into your calendar. In practice it gets thrown by certain wild poster designs, and never point it at a musician’s world tour schedule, but it’s still impressive when it works.

The thing with all of this, though, was that Visual Intelligence involved pointing your iPhone camera at whatever you were interested in. What Apple has done with iOS 26 is take that step away.

Everything else is the same, but you no longer have to use your camera. You can instead deploy Visual Intelligence on anything on your iPhone’s screen.

This one change means that researchers can find out more about objects they see on websites. And shoppers can freeze-frame a YouTube video and use Visual Intelligence to track down the bag that an influencer is carrying.

There is an issue, though: there are now two different ways to use Visual Intelligence, and you have to do two different things to start them. That’s the case even though in 2024, Apple removed the requirement to use the Camera Control button.

How to use regular Visual Intelligence

To continue doing what you’ve been able to do since Visual Intelligence was launched, you still have to first point your iPhone at the object you’re interested in. Then, if you have set up the following options, you can:

- Press the Camera Control button and hold for a moment

- Press the Action Button

- From the Lock Screen, press a Visual Intelligence button

- From Control Center, press another Visual Intelligence button

If your iPhone has a Camera Control button, it is already set up for Visual Intelligence and you need do nothing. Whether it has this or not, though, you can use any of the other options.

To add Visual Intelligence to the Action Button, go to Settings, Action Button, and swipe to Controls.

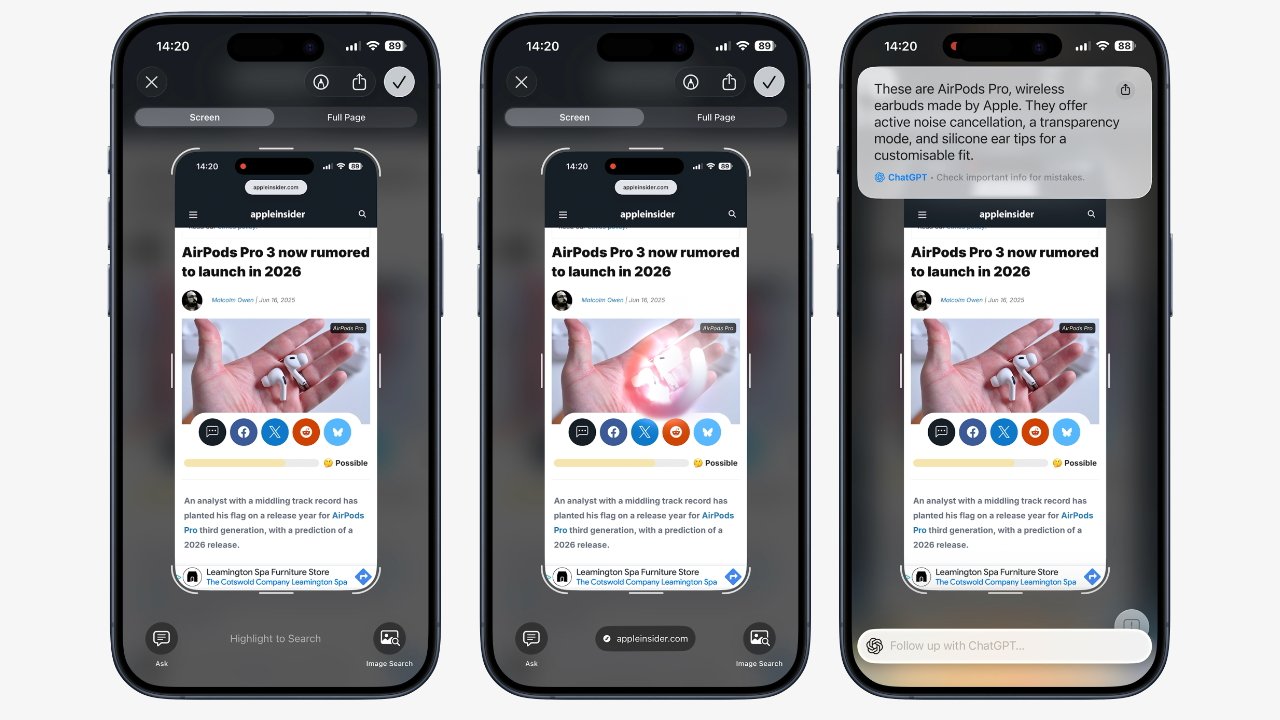

Take a screenshot (left), circle what you’re interested in (middle) and ChatGPT can tell you what it is (right)

Or for Control Center, swipe down to open that, then press and hold on a blank spot. Choose Add a Control and then search for Visual Intelligence.

Lastly, you can also activate the original Visual Intelligence from the Lock Screen. At that screen, press and hold until you get the option to Customize, then add the Visual Intelligence control.

All of this ends up with you being able to use Visual Intelligence the way it was originally intended — and unfortunately, none of it launches the new version.

How to use the new version of Visual Intelligence

This is an extra part of Visual Intelligence, not a replacement. So all of the above remains true and also useful, but to get Visual Intelligence to work on anything on screen, you have to do something completely different.

- Take a screenshot by pressing the volume up and sleep/wake buttons

- Use your finger to circle any part of the image you’re interested in

- Ask a question or do an image search

When you use your finger to circle, or highlight, an area of the screen, it glows with the new Siri-style animation. You can then tap the Ask button and type a question, or swipe up to see the image search results.

Some of those images will be ones for retailers. When they are, you can tap through to buy the item directly.

Apple tried to sell this as being simple, because it uses the same action you do to take regular screenshots. If you’re not in the habit of taking screencaps of your iPhone, though, it’s another set of buttons to learn.

So Visual Intelligence is replete with different ways to use it, one of which provides a very different service to the rest.

Yet being able to identify just about anything on your screen is a huge boon. And consequently Apple increased the usefulness of Visual Intelligence just by not requiring the step where you point your iPhone camera at anything.

Now if only this version of Visual Intelligence could come to the iPad or the Mac.